The long-projected systemic and business transformations that artificial intelligence (AI) technologies can bring about have begun. As a result, many companies and their boards have come under scrutiny in recent years for the effectiveness of their governance mechanisms and their due diligence on the opportunities, as well as the significant risks (financial, regulatory, legal and reputational), posed by AI. The potential issues surrounding AI and associated hyperscale data centers are wide-ranging and span governance, environmental and social aspects. It may be difficult to know whether markets have yet fully priced in some of the large and significant risks alongside the opportunities. This article examines the range of issues from an investor perspective through the lens of recent U.S. shareholder proposals directly related to AI and to companies providing AI tools and infrastructure.

A significant number of institutional investors have set out their expectations and commitments regarding responsible AI (RAI) risk management with respect to long-term financial returns and pragmatic value creation. For example, a February 2024 investor Statement on Ethical AI from the World Benchmarking Alliance Collective Impact Coalition (CIC) for Ethical AI included investors representing over $8.5 trillion in assets under management.

Leading RAI risk management frameworks strive to standardize due diligence considerations. Many institutional investors have specified their alignment with internationally accepted RAI frameworks, such as the OECD AI Principles and the UNESCO Recommendation on the Ethics of Artificial Intelligence, regarding board accountability, transparency, due diligence and risk management. Additionally, an April 2024 Bank Policy Institute white paper expresses support for the U.S. Department of Commerce's NIST AI Risk Management Framework. ISO/IEC 42001:2023 is another widely cited RAI framework, noted for its potential usefulness for compliance with evolving regulatory standards.

There is a growing consensus among many institutional investors globally that effective AI governance is inextricably linked to fiduciary duty, long-term financial performance and the drivers of sustainable economic growth.

In terms of regulatory compliance, the EU AI Act has extraterritorial scope: it extends to companies located outside the European Union if their AI activities affect the EU market or individuals within it. There has also been a proliferation of laws passed by U.S. states and other jurisdictions across the world. However, given the rapid pace of investment and innovation around AI technologies, it is often remarked that strict mandatory safeguards can discourage innovation and lag behind technological developments. On the other hand, others have expressed concern that voluntary disclosure principles can encourage superficial compliance without substantive change, amounting to little more than a marketing facade. Despite concerns on both sides, both regulatory compliance and voluntary frameworks are essential parts of the RAI ecosystem.

In recent years, a number of U.S. companies – primarily in the technology sector, but increasingly in other sectors – have received shareholder proposals requesting increased disclosure of RAI policies, procedures and practices relating to board and committee oversight, environmental sustainability and human rights risk mitigation. Issues addressed by the various shareholder proposals have included privacy concerns, copyright infringement, energy and water consumption, community and societal impacts, human rights and "just transition" strategies for affected employees, as well as board oversight of these issues. The commercial and economic areas involved are also highly varied, ranging from upstream component sourcing, critical minerals supply, geopolitical tensions, industrial policy, infrastructure development and financing, and data acquisition, to downstream applications, safety and security issues and waste management. For the purposes of this article, we examined U.S. shareholder proposals that were either explicitly AI-related or implicitly AI-related by virtue of being filed at companies that have AI as an important or essential part of their business strategy (collectively, "AI-related" in this article).

AI-related shareholder proposals seen at U.S. companies from 2022 to 2025 have addressed many significant risks and opportunities directly and indirectly related to AI, including:

- Board oversight

- GHG emissions targets, climate targets and climate transition plans

- Development and production of fossil fuels

- Physical risks of climate change

- Water resources management

- Child safety

- End-use due diligence (surveillance, censorship, conflict and high-risk areas)

- Ethical acquisition and use of data (confidentiality, security, intellectual property)

- Human capital management (bias, discrimination, workplace surveillance, health and safety, automation and other workforce impacts)

- Just transition to AI

- Misinformation and disinformation

- Targeted advertising

- Weapons development

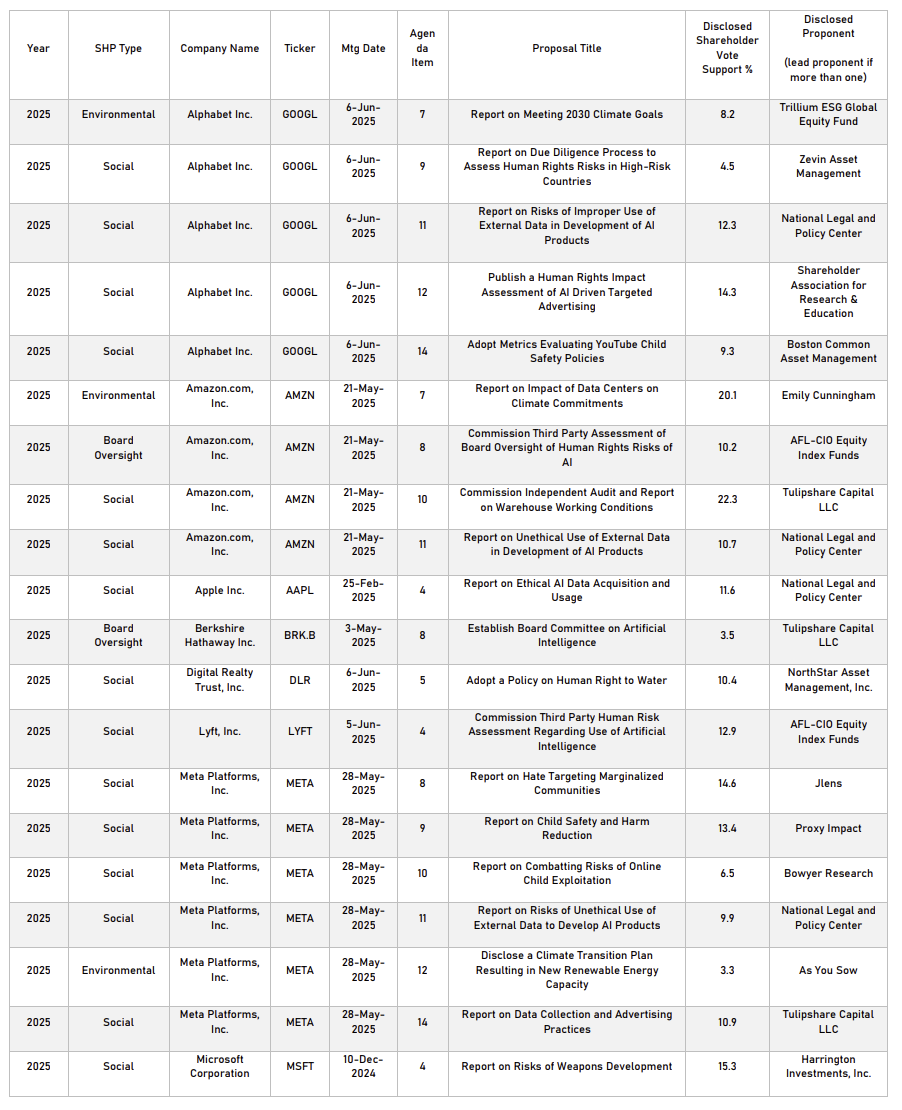

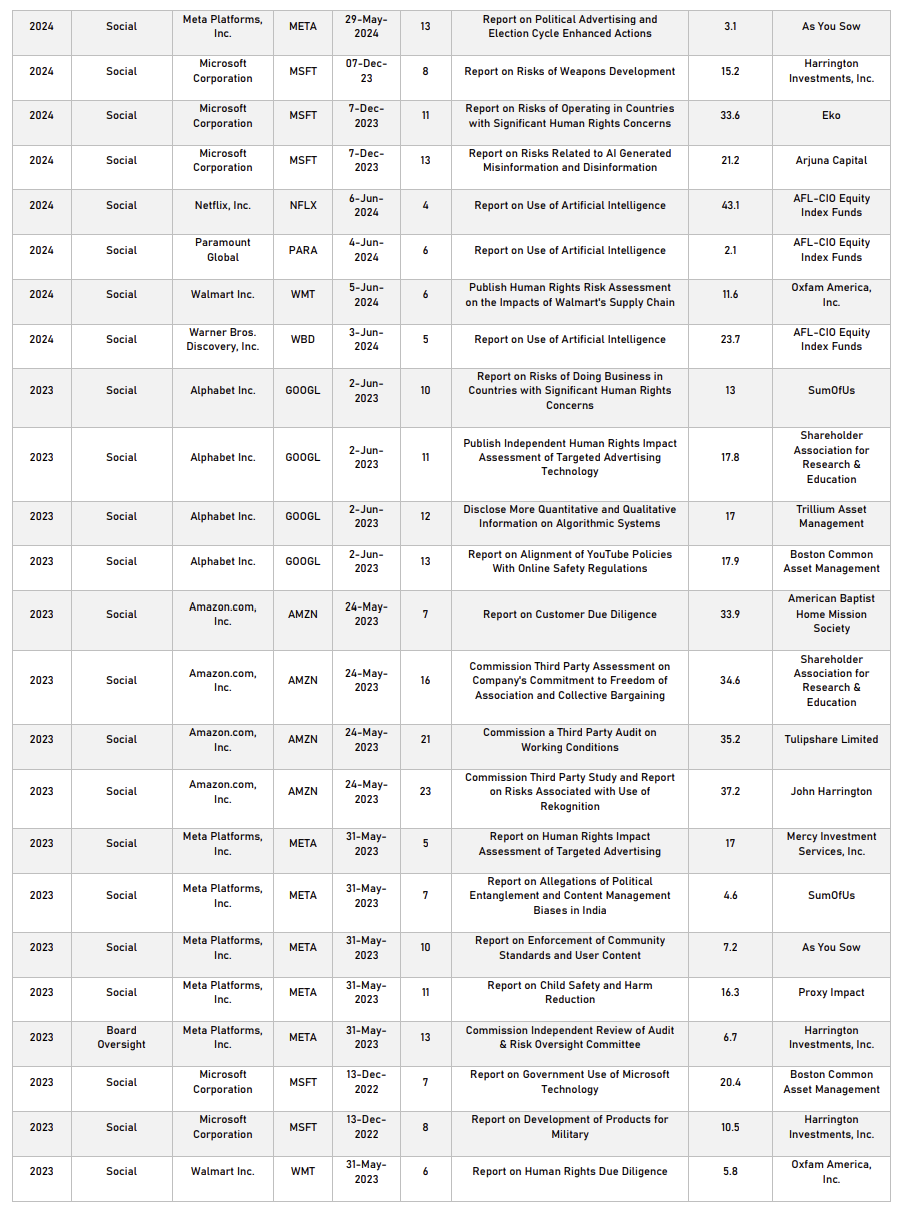

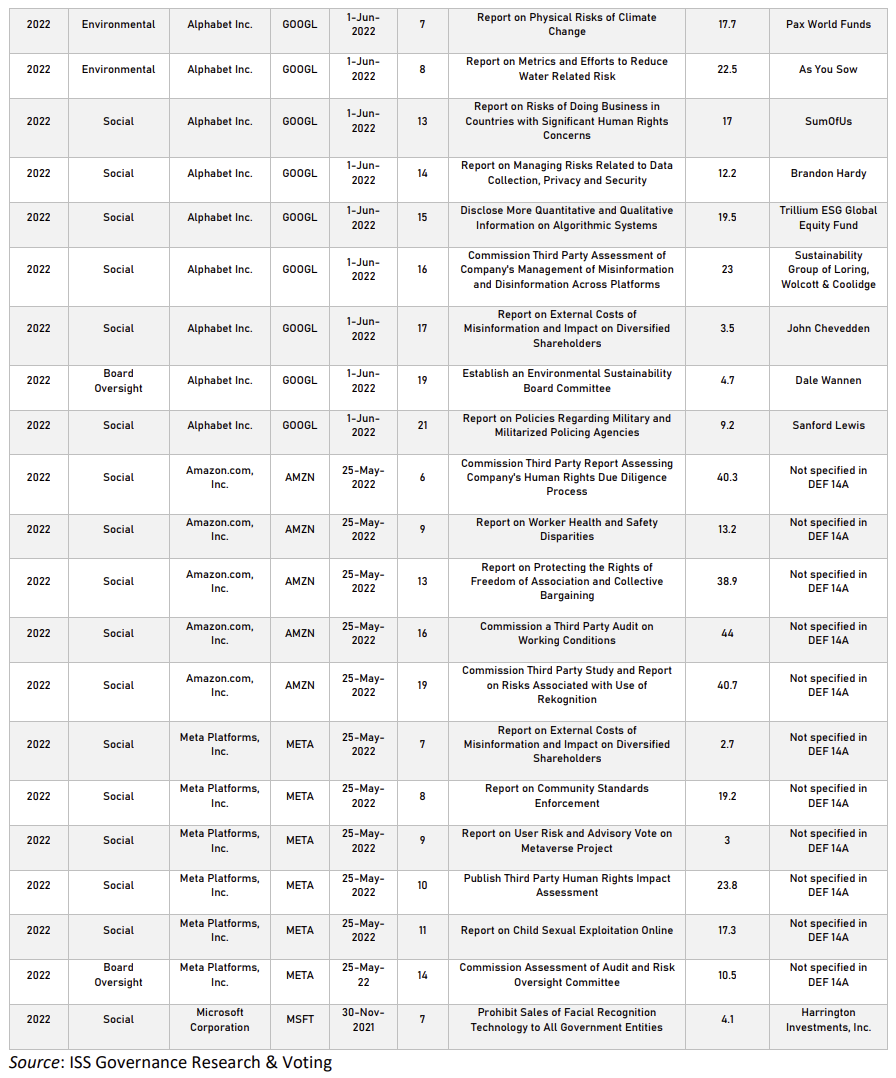

A summary of covered shareholder proposals is presented in the table below.

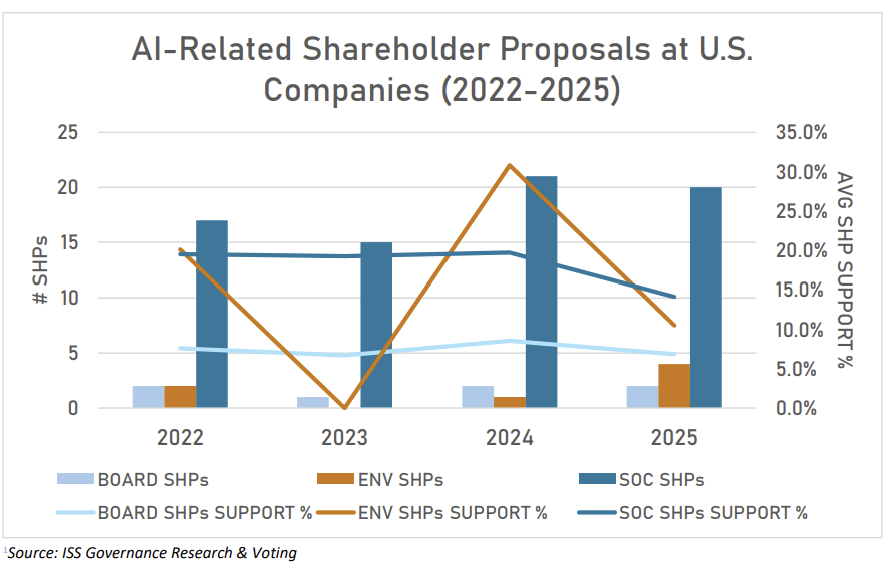

In figures:

During the 2025 U.S. proxy season, there was a significant decrease in the total number of environmental and social shareholder proposals submitted to a vote, in part due to the U.S. Securities and Exchange Commission’s (SEC) release of its Staff Legal Bulletin No. 14M (SLB 14M). However, the overall number of AI-related proposals has not decreased.

Average levels of support for AI-related shareholder proposals followed the recent overall trend in shareholder proposal voting, namely a decline in support for environmental and social proposals in general; however, with the exception of the relatively small number of environmentally focused proposals, support for AI-related proposals has not declined as markedly.

Most AI-related shareholder proposals have addressed "social" issues such as human and labor rights, including child safety, end-use due diligence, data acquisition and use, misinformation and disinformation, targeted advertising, workforce impacts, and more. Each year, proposals on board oversight of AI governance have also been presented, as well as environment-related proposals addressing a range of concerns, including hyperscale data center issues, increased energy consumption, GHG emissions, water risks, and physical risks related to climate change.

Below is the list of AI-related shareholder proposals that went to a vote at U.S. companies from 2022 through mid-2025, as identified and covered in this article.