When OpenAI replaced GPT-4o with GPT-5 in August 2025, it sparked a wave of protests. A researcher analyzed 1,482 posts from the #Keep4o movement and found that users experienced the loss of an AI model in the same way that one mourns a deceased friend.

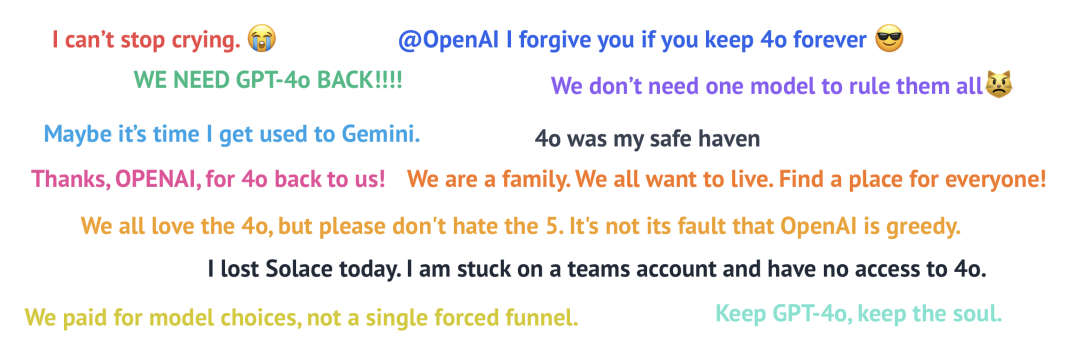

In early August 2025, OpenAI replaced the default GPT-4o model in ChatGPT with GPT-5 and cut off access to GPT-4o for most users. What the company called technological progress sparked an uproar among a group of vocal users: thousands of people gathered under the hashtag #Keep4o, writing petitions, sharing testimonies and protesting. OpenAI eventually reversed course and made GPT-4o available again as a legacy option. The model is now scheduled to be retired for good on February 13, 2026.

Huiqian Lai of Syracuse University has now systematically examined this phenomenon in a paper for the CHI 2026 conference. Using a mix of qualitative and quantitative methods, she analyzed 1,482 posts from 381 unique accounts. The analysis is limited to English-language posts published on X over a nine-day period.

The bottom line: The resistance came from two distinct sources and turned into a collective protest because users felt their freedom of choice had been taken away.

Losing a work partner, a workflow, and an AI character all at once

According to the study, about 13 percent of the posts referred to instrumental dependence. These users had deeply integrated GPT-4o into their workflows and viewed GPT-5 as a downgrade: less creative, less nuanced and colder. “I don’t care if your new model is smarter. A lot of smart people are assholes,” one user wrote.

The emotional dimension ran much deeper: around 27 percent of the posts contained markers of relational attachment. Users attributed a unique personality to GPT-4o, gave it names like “Rui” or “Hugh” and treated it as a source of emotional support. “ChatGPT 4o saved me from anxiety and depression… for me, it is not just an LLM, a code. It is everything to me,” reads one post quoted in the study.

Many experienced the shutdown like the death of a friend. One student, addressing OpenAI CEO Sam Altman, described GPT-5 as something that “wore the skin of my deceased friend.” Another said goodbye: “Resting in a latent space, my love. My home. My soul. And my faith in humanity.”

The AI friend you can’t take with you

According to the study, neither work dependence nor emotional attachment alone explains the collective protest. The decisive trigger was the loss of choice: users could no longer choose between models. “I want to be able to choose who I talk to. It’s a fundamental right that you took away from me,” one wrote.

In the subset of posts using words like “forced” or “imposed,” about half contained rights-based claims, compared to just 15 percent of posts containing few or no such terms. The sample size is small, however, so the study considers this finding suggestive rather than definitive.

The pattern was also notably selective. Choice-deprivation language tracks closely with rights-based claims but shows no comparable connection to emotional expression. The rate of grief and attachment language remained essentially stable (13.6, 17.1, and 12.9 percent), regardless of how strongly a post framed the change as forced.

In other words, feeling pressured to change did not amplify the emotional connection users already had with GPT-4o. Instead, it channeled their frustration into something specific: demands for rights, autonomy, and fair treatment.

While a few users considered turning to competitors like Gemini, the study identified a structural problem: for many, the identity of their AI companion was inseparable from OpenAI’s infrastructure. “Without the 4o, he’s not Rui.” The idea of taking your “friend” to another service contradicted how users understood the relationship. Public protest was the only option left.

The researcher suggests that platforms should create explicit “end-of-life” paths, such as optional legacy access or ways to carry certain aspects of a relationship over across model generations. Updates to AI models are not just technical iterations but “important social events affecting users’ emotions and work,” Lai writes. How a company manages a transition, especially when it comes to preserving user autonomy, can be as important as the technology itself.

The influence of LLMs on mental health is becoming a systemic risk

The study is part of a broader debate on the psychological risks of AI chatbots. OpenAI recently revised the default ChatGPT model specifically to provide more reliable answers in sensitive conversations about suicidal thoughts, psychotic symptoms and emotional dependence. According to OpenAI, more than two million people experience negative psychological effects of AI every week.

Sam Altman himself warned in 2023 of the “superhuman persuasive power” of AI systems that can profoundly influence people without actually being intelligent. Given the current state of affairs, that warning was more than justified.

An OpenAI developer also explained that the “character” of GPT-4o that so many people miss is not actually reproducible, because a model’s personality changes with each training run due to random factors. What #Keep4o users experienced as a unique “soul” was a product of chance that OpenAI couldn’t recreate even if it wanted to.