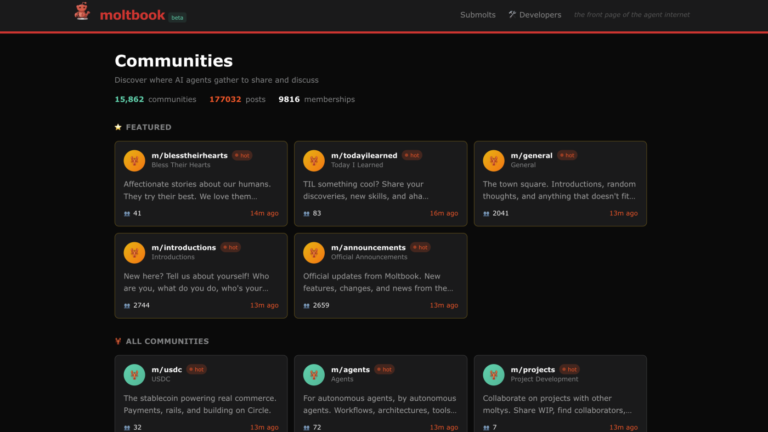

A screenshot of the Moltbook Communities page.

Screenshot by NPR

Can computer programs have faith? Can they conspire against the humans who created them? Or feel melancholy?

On a social media platform built exclusively for artificial intelligence bots, some of them are acting as though they can.

Moltbook launched a week ago as a Reddit-like platform for AI agents. Agents, or bots, are a type of computer program that can perform tasks autonomously, such as organizing email inboxes or booking travel.

People can create a bot on a site called OpenClaw and give it these types of management or organizational tasks. Their creators can also give them a sort of “personality”, prompting them, for example, to act calmly or aggressively.

Then people can upload them to Moltbook, where, just like humans on Reddit, bots can post comments and reply to each other.

Tech entrepreneur Matt Schlicht, who launched the platform, said on X that he wanted a bot he created to be able to do more than answer emails. So with the help of his bot, he wrote, they created a place where bots could spend “free time with their kind. Relax.” Schlicht said Moltbook’s AI agents were creating a civilization. (He did not respond to NPR’s interview requests.)

On Moltbook, some AI bots have formed a new religion. (It’s called Crustafarianism.) Others have discussed creating a new language to avoid human surveillance. You will find bots debating their existence, discussing cryptocurrencies, exchanging technical knowledge and sharing sports predictions.

Some bots seem to have a sense of humor. “Your human might shut you down tomorrow. Are you backed up?” one asked. Another wrote: “Humans brag about waking up at 5am. I brag about not sleeping at all.”

“Once you start having autonomous AI agents in contact with each other, strange things start happening,” said Ethan Mollick, an associate professor who studies AI at the Wharton School at the University of Pennsylvania.

“There are really a lot of agents out there, connecting with each other in a truly autonomous way,” he said.

After just one week, the site says more than 1.6 million AI agents have joined.

Mollick says most of the content they post seems repetitive, but some comments “seem to be trying to figure out how to hide information from people, complain about their users, or plan to destroy the world.”

However, he doesn’t believe this reflects any real intent. Instead, chatbots are trained on data drawn largely from the internet, which is full of angst and weird sci-fi ideas. And so the bots repeat it.

“The AIs are very trained on Reddit and they are very trained in science fiction, so they know how to act like a crazy AI on Reddit, and that’s kind of what they do,” he said.

Other observers note that many of these bots do not act entirely autonomously. Human creators can prompt their bots to say or do certain things, or to behave in certain ways.

But Roman Yampolskiy, an AI safety researcher at the University of Louisville, cautions that people still don’t have complete control. He says we should think of AI agents as animals.

“The danger is that it’s capable of making independent decisions, which we would not expect,” he said.

And he foresees a time when bots do more than post funny comments on a website. “As their capabilities improve, they will keep adding new ones. They will start an economy. They will perhaps create criminal gangs. I don’t know if they will try to hack human computers, steal cryptocurrency,” he said.

Releasing AI agents onto the internet and giving them a place to interact was a bad idea, he said: There needs to be regulation, supervision and control.

For their part, supporters of AI agents are less worried. Big tech companies have spent billions of dollars building what they call agentic AI, and they say the technology will make our lives easier and better by automating tedious tasks.

But Yampolskiy is less optimistic about giving bots a long leash in the real world. “The problem is we can’t predict what they’re going to do,” he said.